Lately I’ve been enthralled with AI image generation, specifically Stable Diffusion. The idea is that you have a model that relates text to images: the tool starts from random noise and refines it over a number of steps into an image that matches the prompt. I’d previously signed up for beta access to DALL·E 2 and got to try it out. You get a certain number of free credits per month, which is nice, but AI image generation involves a lot of trial and error, so it’s easy to chew through credits while tweaking the prompt to get the result you’re after. I also played around with Stability.Ai’s DreamStudio Lite, which has a similar credit model.

On August 22, 2022, Stability.Ai publicly released their text-to-image model, a big move that opened up lots of options for developers. After trying a few different projects, I landed on AUTOMATIC1111 / stable-diffusion-webui, which was pretty easy to set up and has optimizations that make it very usable on my NVIDIA video card with 4GB of VRAM. At 512 x 512 pixels it usually takes 1 to 3 seconds per iteration, but I’m a patient person, and the tool has great batching support, so you can set up a bunch of variations and check back when they’re done.

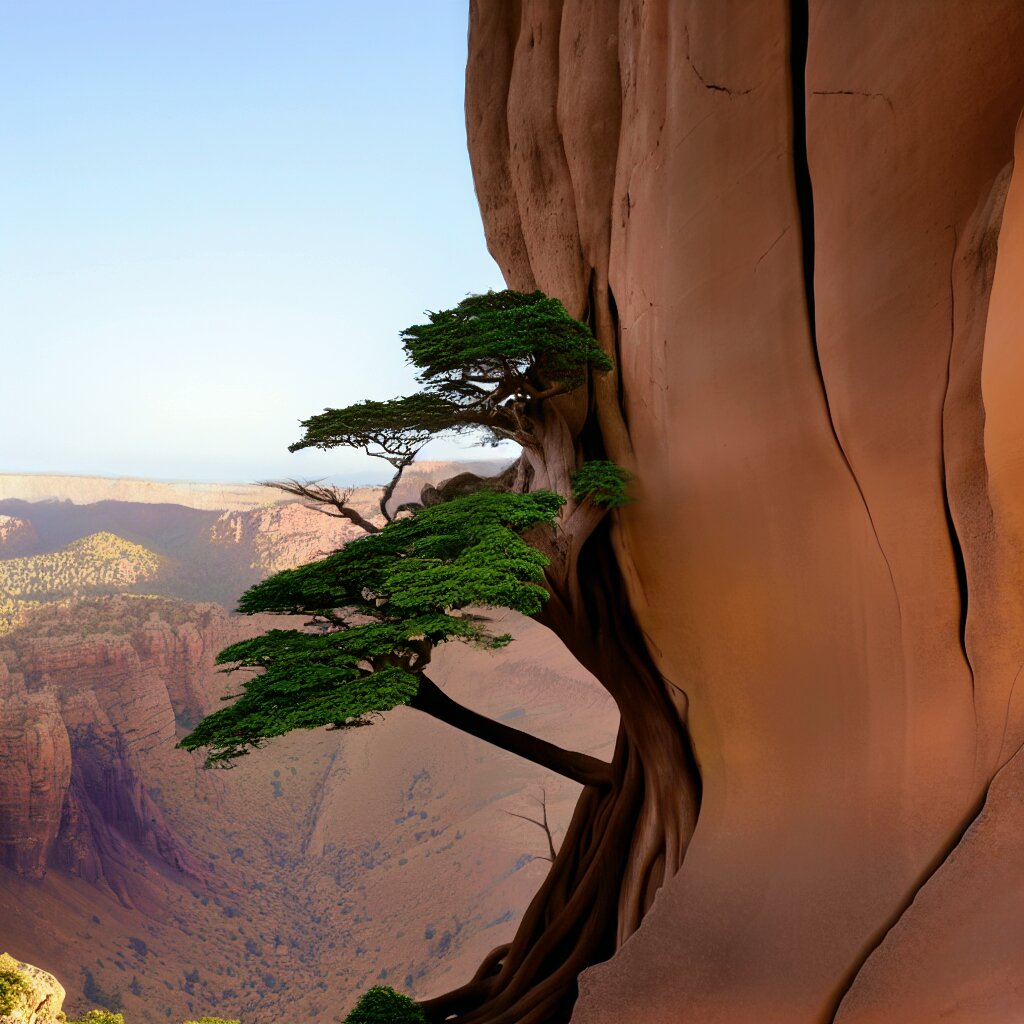

Below are some of the images I’ve generated. These are typically the result of several “img2img” iterations: taking the result of the initial text prompt, adding noise to it at a chosen strength, and using that plus a text prompt to nudge the result toward what I had in mind. Sure, it’s fun to just type something in and see what comes out, but that alone isn’t satisfying for me.
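For the curious, the img2img “noise it up, then denoise” step can be sketched roughly like this. It’s a simplified illustration, not the web UI’s actual code: it assumes a diffusers-style scheduler where a denoising strength `s` noises the input about `s` of the way through the training timesteps and then runs roughly `s × steps` sampling steps, and it uses the “scaled linear” beta schedule (0.00085 to 0.012) common in Stable Diffusion configs. The function names are hypothetical, and the “latent” is just a list of floats standing in for the real image latent.

```python
import math
import random


def alpha_bar_schedule(num_train_steps=1000, beta_start=0.00085, beta_end=0.012):
    """Cumulative signal-retention factors (alpha-bar) for a 'scaled linear' beta schedule.

    Assumption: betas are linear in sqrt space, as in common Stable Diffusion configs.
    """
    alpha_bars = []
    prod = 1.0
    for i in range(num_train_steps):
        s = math.sqrt(beta_start) + (math.sqrt(beta_end) - math.sqrt(beta_start)) * i / (num_train_steps - 1)
        beta = s * s
        prod *= 1.0 - beta  # fraction of the original signal still present after step i
        alpha_bars.append(prod)
    return alpha_bars


def img2img_start(latent, strength, num_inference_steps=20, num_train_steps=1000, seed=0):
    """Hypothetical helper: noise a latent according to `strength` and
    return (noisy_latent, number_of_denoising_steps_to_run)."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    # Only the last `strength` fraction of the sampling schedule is actually run.
    steps_to_run = min(int(num_inference_steps * strength), num_inference_steps)
    if steps_to_run == 0:
        return list(latent), 0  # no noise added, image returned unchanged
    alpha_bars = alpha_bar_schedule(num_train_steps)
    t = int(num_train_steps * strength) - 1  # approximate training timestep to start from
    a = alpha_bars[t]
    rng = random.Random(seed)
    # Forward diffusion: x_t = sqrt(a) * x_0 + sqrt(1 - a) * noise
    noisy = [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in latent]
    return noisy, steps_to_run
```

With the defaults, a strength of 0.75 on a 20-step run leaves 15 denoising steps starting from a heavily noised input, so higher strengths give the prompt more room to change the image, while lower strengths stay closer to the picture you fed in.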